By Bob Reselman, Software Developer and Technology Journalist

If your company is not testing its software continuously, you’re on the road to doom. Given the increasing demands of the marketplace to have more software at faster rates of release, there’s no way a company can rely on manual testing for a majority of its quality assurance activities and remain competitive. Automation is essential to the CI/CD process, and that includes usability testing.

Competitive companies automate their testing process. That’s good news. The bad news is that typically the scope of automation is limited to user behavior testing that’s easy to emulate — for example, functional and performance testing. The more complicated tests — those centered around human factors — are typically left to efficiency-challenged manual testing.

The result: a company can have lightning-fast tests executing in some parts of the release process, only to slow to a snail’s pace when human-factors testing needs to be accommodated. There are ways to automate human-factors testing within the CI/CD process. The trick is to understand where human intervention in the testing process is necessary and to build automated processes that accommodate those boundaries.

The four aspects of software testing

To understand where human-factors testing starts and stops, it’s useful to have a conceptual model for segmenting software testing overall. One model separates software testing into four aspects: functional, performance, security, and usability testing (Fig. 1). Table 1 describes each.

Fig. 1: The four parts of software testing: functional, performance, security, and usability

Table 1: Definition of the four parts of software testing

- Functional: Verifies that the software behaves as expected with respect to operational logic and algorithmic consistency. Example: unit and component tests; integration and UI testing.
- Performance: Determines a software system’s responsiveness, accuracy, integrity, and stability under particular workloads and operating environments. Example: load, scaling, and deployment tests.
- Security: Verifies that a software system will not act maliciously and is immune to malicious intrusion. Example: penetration, authentication, and malware injection tests.
- Usability: Determines how easy it is for a given community of users to operate (use) a particular software system. Example: user interface task efficiency, information retention, and input accuracy testing.

Data-driven vs. human-driven tests

Given the information provided above, it makes sense that functional, performance, and security testing get most of the attention when it comes to automation. These tests are machine-centered and quantitative: data goes in, data comes out, and all of it can be machine-initiated and run under script. Things get more complicated with usability testing.

Usability testing requires random, gestural input that only a human can provide. As such, creating an automated process for this type of test is difficult. It’s not just a matter of generating data and applying it to a web page with a Selenium script; human behavior is hard to emulate via scripts. Consider the process of usability testing a web page for data-entry efficiency. The speed at which a human enters data will vary according to the layout and language of the page as well as the complexity of the data to be entered. We can write a script that makes assumptions about how humans enter data, but to get an accurate picture, it’s better to have humans perform the task. After all, the goal of a usability test is to evaluate human behavior.
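To see why scripted emulation falls short, consider a minimal Python sketch of what such a script has to hard-code. The typing speed, the pause before each field, and the jitter range below are hypothetical values chosen for illustration, not measurements; the function name `emulate_form_entry` is likewise invented here.

```python
import random

# Hypothetical assumptions baked into the script. These are illustrative
# guesses, not measurements of real users.
ASSUMED_CHARS_PER_SECOND = 4.0   # assumed typing speed
ASSUMED_THINK_SECONDS = 1.5      # assumed pause before each field


def emulate_form_entry(field_values, rng=None):
    """Return simulated seconds spent filling each form field.

    A uniform jitter stands in for human variation. Real variation depends
    on page layout, language, and data complexity, which this model ignores.
    """
    rng = rng or random.Random()
    timings = {}
    for name, value in field_values.items():
        typing = len(value) / ASSUMED_CHARS_PER_SECOND
        jitter = rng.uniform(0.8, 1.2)
        timings[name] = (ASSUMED_THINK_SECONDS + typing) * jitter
    return timings
```

Every number the script produces follows from those constants, so it can only confirm the assumptions it starts with; it cannot reveal that a confusing layout makes real users hesitate.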

To be viable in a continuous integration/continuous delivery (CI/CD) process, testing must be automated. The question then becomes, how can we automate usability and other types of human-factors testing when, at first glance, they seem to be beyond the capabilities of automation? The answer: as best we can.

Automate as much as possible

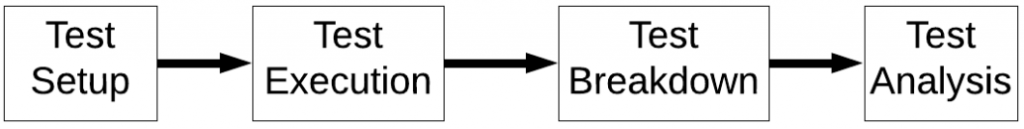

No matter what type of testing you are performing, it’s going to be part of a process that has essentially four stages: setup, execution, teardown, and analysis (Fig. 2).

Fig. 2: The four stages of a software test

When it comes to creating efficiency in a CI/CD process, the trick is to automate as much of a given stage as possible, if not all of it (operative words being “as possible”). Some types of tests lend themselves well to automation in all four stages; others do not. It’s important to understand where, across the four stages, the limits of automation lie for a given test.
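One way to make those limits explicit is to record, for each type of test, which stages are automated and which need a person. The Python sketch below encodes one reading of the discussion that follows as a mapping; the `Mode` labels and the per-stage assignments are assumptions, and a real team would fill them in from its own pipeline.

```python
from enum import Enum


class Stage(Enum):
    SETUP = "setup"
    EXECUTION = "execution"
    TEARDOWN = "teardown"
    ANALYSIS = "analysis"


class Mode(Enum):
    AUTOMATED = "automated"
    PARTLY_MANUAL = "partly manual"
    MANUAL = "manual"


# Assumed automation level of each stage, per type of test.
AUTOMATION_MAP = {
    "functional": {stage: Mode.AUTOMATED for stage in Stage},
    "performance": {stage: Mode.AUTOMATED for stage in Stage},
    "security": {
        Stage.SETUP: Mode.PARTLY_MANUAL,     # may involve hardware changes
        Stage.EXECUTION: Mode.AUTOMATED,
        Stage.TEARDOWN: Mode.PARTLY_MANUAL,  # may involve hardware changes
        Stage.ANALYSIS: Mode.AUTOMATED,
    },
    "usability": {
        Stage.SETUP: Mode.PARTLY_MANUAL,     # human subjects must show up
        Stage.EXECUTION: Mode.MANUAL,        # humans perform the test
        Stage.TEARDOWN: Mode.AUTOMATED,
        Stage.ANALYSIS: Mode.AUTOMATED,
    },
}


def manual_touchpoints(test_type):
    """List the stages of a test type that need a human in the loop."""
    return [stage for stage, mode in AUTOMATION_MAP[test_type].items()
            if mode is not Mode.AUTOMATED]
```

With this mapping, `manual_touchpoints("functional")` returns an empty list, while `manual_touchpoints("usability")` returns the setup and execution stages: the places where a pipeline has to plan for human time.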

Full automation is achievable during functional and performance tests

For functional and performance testing, automating all four stages is straightforward. You can write a script that (1) gathers data, (2) applies it to a test case, and (3) resets the testing environment to its initial state. Then you can make the test results available to another script that (4) analyzes the resultant data and passes the analysis to interested parties.

Security testing requires some manual accommodation

Security testing is a bit harder to automate because some of the test setup and teardown may involve changes to specific hardware. Sometimes this amounts to nothing more than adjusting configuration settings in a text file. Other times it might require a person to physically move routers, security devices, and cables around a data center.
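The text-file case is the easy one to automate. The Python sketch below, which assumes a hypothetical config file with one `key=value` setting per line, applies overrides during setup and restores the original file during teardown. The hardware case has no equivalent; it remains a manual step that the pipeline must wait for.

```python
from contextlib import contextmanager
from pathlib import Path


@contextmanager
def temporary_config(path, overrides):
    """Apply key=value overrides to a text config file, then restore it.

    Assumes a simple 'key=value' per-line format (hypothetical); real
    security devices use their own formats and tooling.
    """
    path = Path(path)
    original = path.read_text()
    seen = set()
    updated = []
    for line in original.splitlines():
        key = line.split("=", 1)[0].strip()
        if "=" in line and key in overrides:
            updated.append(f"{key}={overrides[key]}")  # replace existing value
            seen.add(key)
        else:
            updated.append(line)  # keep unrelated lines untouched
    # Append override keys that were not already present in the file.
    updated += [f"{k}={v}" for k, v in overrides.items() if k not in seen]
    path.write_text("\n".join(updated) + "\n")
    try:
        yield path  # setup is done: run the security test here
    finally:
        path.write_text(original)  # teardown: restore the exact original
```

The `try`/`finally` around `yield` restores the original file even if the test inside the `with` block raises.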

Usability tests have a special set of challenges

Usability testing adds a degree of complexity that challenges automation. Test setup and execution require coordinating human activity. For example, if you’re conducting a usability test on a new mobile app, you need to make sure that human test subjects are available, can be observed, and have the proper software installed on the appropriate hardware. Then each subject has to perform the test, usually under the guidance of a test administrator. All of this requires a considerable amount of coordination effort that can slow down the testing process when done manually.

Although the actual execution of a usability test needs to be manual, most of the activities in the setup, teardown, and analysis stages can be automated. You can use scheduling software to manage a significant portion of the setup (e.g., finding test subjects and then coordinating their invitations to a testing site). Configuring the required applications and hardware can also be automated.
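As an illustration of the scheduling piece, here is a minimal Python sketch that places test subjects into sessions based on their availability and produces invitation text. The `Session` type, the slot strings, and the device names are hypothetical; dedicated scheduling software would also handle calendars, reminders, and cancellations.

```python
from dataclasses import dataclass, field


@dataclass
class Session:
    slot: str       # hypothetical slot label, e.g. "Mon 10:00"
    device: str     # hardware the subject will use in this session
    capacity: int   # how many subjects the lab can observe at once
    subjects: list = field(default_factory=list)


def schedule(subjects, sessions):
    """Greedily place each subject into the first open session they can attend.

    subjects: dict mapping subject name -> set of slots they are available for.
    Returns (invitations, unscheduled).
    """
    invitations = []
    unscheduled = []
    for name, available in subjects.items():
        for session in sessions:
            if session.slot in available and len(session.subjects) < session.capacity:
                session.subjects.append(name)
                invitations.append(f"Invite {name}: {session.slot} on {session.device}")
                break
        else:  # no session fit this subject
            unscheduled.append(name)
    return invitations, unscheduled
```

The greedy pass is simple but not optimal. If one subject is free for both a one-seat Monday session and a Tuesday session, and a second subject is free only on Monday, the greedy pass can give Monday to the first subject and leave the second unscheduled even though both could fit. A bipartite matching algorithm avoids that when it matters.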

In terms of observing test subjects, you can install software on the devices under test that will measure keyboard, mouse, and screen activity. Some usability labs will record test-subject behavior on video. Video files can then be fed to AI algorithms for pattern recognition and other types of analysis. There’s no need to have a human review every second of recorded video to determine the outcome.
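Once keyboard and mouse activity is logged, the analysis stage can run without a human. The Python sketch below assumes a hypothetical event log of `(subject, timestamp_seconds, kind, detail)` tuples and computes task time, click count, and a correction rate (backspaces as a share of keystrokes), a rough proxy for input accuracy. Real instrumentation tools define their own formats and metrics.

```python
from collections import defaultdict


def summarize_sessions(events):
    """Summarize logged input events per test subject.

    events: iterable of (subject, timestamp_seconds, kind, detail) tuples,
    where kind is one of "task_start", "task_end", "key", "click".
    This log format is a hypothetical stand-in for real instrumentation output.
    """
    per_subject = defaultdict(lambda: {"start": None, "end": None,
                                       "keys": 0, "backspaces": 0, "clicks": 0})
    for subject, ts, kind, detail in events:
        s = per_subject[subject]
        if kind == "task_start":
            s["start"] = ts
        elif kind == "task_end":
            s["end"] = ts
        elif kind == "key":
            s["keys"] += 1
            if detail == "Backspace":  # treat backspace as a correction
                s["backspaces"] += 1
        elif kind == "click":
            s["clicks"] += 1

    summary = {}
    for subject, s in per_subject.items():
        duration = None
        if s["start"] is not None and s["end"] is not None:
            duration = s["end"] - s["start"]
        rate = s["backspaces"] / s["keys"] if s["keys"] else 0.0
        summary[subject] = {"seconds": duration, "clicks": s["clicks"],
                            "correction_rate": round(rate, 3)}
    return summary
```

For a subject who starts at 0.0 s, types four keys (one of them Backspace), clicks once, and finishes at 12.5 s, the summary is `{"seconds": 12.5, "clicks": 1, "correction_rate": 0.25}`.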

The key: home in on those test activities that need to be performed by a human, and automate the remaining tasks. Isolating manual testing activities into a well-bounded time box will go a long way toward making usability testing in a CI/CD process more predictable and more efficient.
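A time box is easy to make machine-checkable. The Python sketch below, built around a hypothetical `TimeBox` class, records when a manual activity starts and finishes and reports whether it stayed within its budget, so a pipeline can flag an overrun instead of silently waiting. The optional `now` arguments exist only so the sketch can be exercised with fixed timestamps.

```python
import time
from dataclasses import dataclass
from typing import Optional


@dataclass
class TimeBox:
    """A manual testing activity with an agreed time budget."""
    name: str
    budget_seconds: float
    started: Optional[float] = None
    finished: Optional[float] = None

    def start(self, now: Optional[float] = None) -> None:
        self.started = time.monotonic() if now is None else now

    def finish(self, now: Optional[float] = None) -> None:
        self.finished = time.monotonic() if now is None else now

    def status(self, now: Optional[float] = None) -> str:
        """Return 'pending', 'running', 'done', or 'overrun'."""
        if self.started is None:
            return "pending"
        end = self.finished
        if end is None:  # still in progress: measure against the current time
            end = time.monotonic() if now is None else now
        if end - self.started > self.budget_seconds:
            return "overrun"
        return "done" if self.finished is not None else "running"
```

A CI job could poll `status()` on a running box and alert the team when it returns `"overrun"`, which keeps the manual portion of the process visible rather than open-ended.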

Putting it all together

Some components of software testing, such as functional and performance testing, are automation-friendly. Others, such as security and usability testing, require episodes of manual involvement, which makes test automation a challenge.

You can avoid having manual testing become a bottleneck in the CI/CD process by ensuring that the scope, occurrence, and execution time of the manual testing activities, particularly those around usability tests, are well known. The danger comes about when manual testing becomes an unpredictable black box that eats away at time and money — with no end in sight.
