What’s driving load testing in Agile and DevOps environments today? The ever-increasing share of digital technology is pushing companies to accelerate the pace at which new services/features are released. At the same time, applications are offering richer functionality with an improved, more sophisticated user experience. The explosion in demand for application reliability and speed is creating the need for more complex and more intensive load testing practices.

Research and development teams face two challenges: the need to test faster and the need to test more regularly. In response, they’re adopting Agile and DevOps methodologies. Some questions remain: how do I integrate load testing, predominantly a manual discipline, into shorter cycles? Where can I incorporate automation to support accelerated delivery goals?

Performance anomalies can be costly to resolve. Fixing them can have a significant impact on an application’s code structure and architectural foundation. Load testing early helps minimize the cost of technical incidents and avoid a complete service failure for your users.

Before getting into load testing processes and best practices, it’s worth thinking about the following questions so that you can shape a forward-thinking plan:

What is load testing?

A load test is a type of non-functional test and a subset of performance testing. It’s used to identify bottlenecks by simulating user requests to an app or website and reproducing real-life user scenarios. This makes it possible to detect potential malfunctions before publishing – giving developers time to make the software more robust before moving it to a live production environment.

In software engineering, load testing is often used for client and server apps as well as web, Intranet, and Internet apps.

Why is load testing necessary?

Load testing is a crucial component of the development process. It ensures that the application can handle the number and nature of requests it is likely to receive when live. Evaluating your app with a load testing tool helps ensure you provide a smooth user experience and therefore avoid negative user feedback, which could ultimately impact your bottom line.

What are the objectives of load testing?

The goal of a website is usually to attract the largest possible number of visitors/buyers. To support this, companies set aside a budget to run advertising campaigns. However, if the site or app experiences problems, particularly when faced with large amounts of traffic or requests, no amount of advertising will fix the issues that will likely ensue.

Load testing must seek to understand:

- The number of users the app/website can support at any one time

- The maximum operational capability of the app/website

- Whether the current infrastructure can sufficiently support a user’s interaction with the app/website without a hiccup

- The durability of the app/website when challenged by peak user load

What’s the difference between a load test and a stress test?

Load tests help you understand the limitations of an app/website by simulating user behavior during normal and high loads. Stress tests, also known as resistance tests, determine the robustness of the system when those limits are exceeded.

Together, these tests provide a capability review of an app/website as to whether it can manage the actual loads it will likely encounter (with the stress test designed to dig a little deeper).

Load test

- The most common and widely used.

- Applies normal levels of activity to see if the app/website works properly under normal conditions.

- Ensures apps can handle normal levels of activity without issue.

Stress test

- Goes further than the load test.

- Applies intense levels of activity to “stress” the app/website – taking it beyond its normal operational capability (often to breaking point).

- Reveals which aspects of the app fail, and in what order. By testing to its breaking point, this test also shows how the app/website recovers after a failure.
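The difference between the two tests can be sketched as two load profiles. The example below is only an illustration: `step_profile`, `EXPECTED_PEAK_USERS`, and all the user counts are hypothetical values chosen for the sketch, not figures from any tool. The load profile ramps up to the expected peak, while the stress profile starts there and keeps climbing.

```python
def step_profile(start_users, end_users, steps):
    """Return evenly spaced virtual-user counts from start_users to end_users."""
    if steps < 2:
        raise ValueError("steps must be at least 2")
    increment = (end_users - start_users) / (steps - 1)
    return [round(start_users + i * increment) for i in range(steps)]


# Hypothetical expected peak for the application under test.
EXPECTED_PEAK_USERS = 200

# Load test: stay within normal and expected peak activity.
load_profile = step_profile(50, EXPECTED_PEAK_USERS, 4)

# Stress test: start at the expected peak and push well beyond it.
stress_profile = step_profile(EXPECTED_PEAK_USERS, 3 * EXPECTED_PEAK_USERS, 5)

print(load_profile)    # [50, 100, 150, 200]
print(stress_profile)  # [200, 300, 400, 500, 600]
```

In a stress test, the interesting result is the step at which errors first appear and how the system behaves once the load drops back.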

10 ways to optimize load testing

- Automate performance tests: Automating performance testing is undoubtedly the best way to make it as efficient as possible. Automation lets you pre-define a schedule of test sets to run on their own according to a plan. In the context of Continuous Integration (CI), automation is essential. Outside of CI, it prevents regressions and increases the overall amount of testing you can do.

- Generate virtual users: A realistic load test includes virtual users, each running a predefined scenario. Apps can’t differentiate between virtual and real users, making this an effective way to find problems before actual users ever encounter them.

- Set up unit performance tests: Before creating load tests, you should set up unit performance tests, each of which can be written as soon as the relevant section of code is ready. If you’re following a test-driven development (TDD) approach, you’ll need to set performance expectations and create executable tests for each code module.

- Adopt a modular approach: Automating unit performance testing allows you to create a unit test library, reasonably quickly. You then have many different modules which can be assembled into several test scenarios. This lets you generate complex interactions that test the system thoroughly and realistically. Meanwhile, modularity helps you better understand the system as a whole.

- Do exploratory tests: Exploratory testing is an approach that prescribes testing sections of your app before it’s complete. It lets you explore the app/website without setting up specific scenarios, searching instead for particular points of interest to test. Tests can then be built as the exploration progresses.

- Test in the Cloud: Performance testing requires a significant amount of resources, which can mean that regular testing of an app/website isn’t always possible. Integrating the Cloud into your testing process is one solution, as it lets you create heavy loads without affecting your internal infrastructure. Also, when testing in the Cloud, loads must travel through all of the network layers, load balancers, and security firewalls in the same manner as any user accessing your app/website would. It’s even possible to geographically distribute the sources of load, making it an incredibly practical method to test realistically.

- Involve the whole team: To maximize the testing effort, you need an approach that builds testing into your development structure early and often, with all team members on board. Agile methodology is an excellent example of this – encouraging teams to communicate and collaborate to gain flexibility and responsiveness.

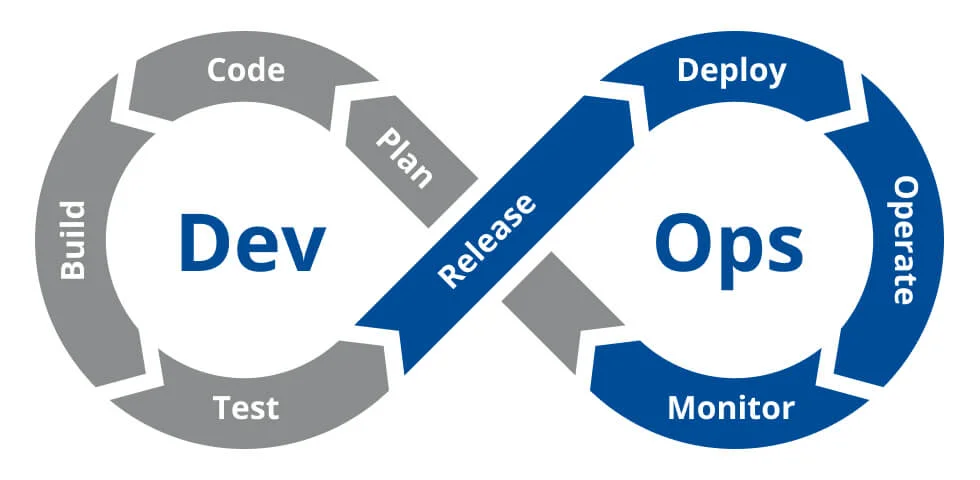

- Take a DevOps approach: Establishing a true partnership between development and operations teams makes testing much more impactful. It’s much easier for operational staff when they understand the basic principles of coding, and in the other direction it’s easier for developers to deliver a suitable product when requirements are crystal clear and everyone is working towards a common goal.

- Reuse scenarios to increase flexibility: Using work for multiple purposes is an effective step toward getting the most out of what you do. Specific testing tools, like NeoLoad, let you easily reuse scenarios. The same scenario used for load testing can be migrated to the production environment for performance monitoring. Test scenarios can also be shared between teams – significantly increasing the amount and types of testing you can do.

- Monitor KPIs: To execute relevant performance tests, it’s essential to define key performance indicators from the start, remaining focused on them throughout. They will guide and help you stay on track during development.
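To make the ideas of virtual users and KPIs more concrete, here is a minimal sketch using only the Python standard library. It is not how any particular tool works: `run_virtual_user`, `run_load_test`, and `example_scenario` are hypothetical names, the scenario is a stand-in for a real request, and the KPIs (request count, error rate, mean, and nearest-rank 95th percentile) are just one reasonable selection. It assumes at least one virtual user and one iteration.

```python
import math
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def run_virtual_user(scenario, iterations):
    """Run one virtual user: call the scenario repeatedly and time each call."""
    samples = []
    for _ in range(iterations):
        start = time.perf_counter()
        try:
            scenario()
            ok = True
        except Exception:
            ok = False  # a failed call counts as an error, not a crash
        samples.append((time.perf_counter() - start, ok))
    return samples


def run_load_test(scenario, virtual_users, iterations):
    """Run the scenario with concurrent virtual users and summarize KPIs."""
    with ThreadPoolExecutor(max_workers=virtual_users) as pool:
        futures = [
            pool.submit(run_virtual_user, scenario, iterations)
            for _ in range(virtual_users)
        ]
        samples = [sample for future in futures for sample in future.result()]

    durations = sorted(duration for duration, _ in samples)
    errors = sum(1 for _, ok in samples if not ok)
    p95_index = max(0, math.ceil(0.95 * len(durations)) - 1)  # nearest rank
    return {
        "requests": len(samples),
        "error_rate": errors / len(samples),
        "mean_s": statistics.mean(durations),
        "p95_s": durations[p95_index],
    }


def example_scenario():
    """Hypothetical scenario: a short sleep stands in for a real request."""
    time.sleep(0.01)


if __name__ == "__main__":
    print(run_load_test(example_scenario, virtual_users=5, iterations=10))
```

Because the scenario is an ordinary function, the same scenario can be reused with different user counts, which is the modular approach described in the list above.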

What are the prerequisites for performing load tests?

Know the infrastructure

Accurate and reliable tests are the result of testers who understand their infrastructure in its entirety. Otherwise, only partial analysis can take place, leaving you with incomplete and unreliable results. To combat this, everyone needs to bring the proper documentation to the table so that test engineers can perform tests under the best conditions.

Choose a testing tool

Not all load testing tools are created equal, nor do they offer the same services. It’s important to ask the right questions of the tool before integrating it into your testing process:

- What are the expectations and requirements of the tool?

- Which technologies (old and current) does it support?

- What level of scenario complexity is possible?

- Does it enable continuous testing?

- Can it be integrated into a continuous delivery pipeline?

Work in collaboration with the CI/CD pipeline

To gain an advantage in a highly competitive environment, companies need to find ways to deliver software faster. Nothing is more effective than an automated process of Continuous Integration (CI) or Continuous Deployment (CD).

For the automated testing to be effective, you need to understand the pipeline architecture inside out. Without this knowledge, supporting processes may lack consistency and cause problems with automated testing.

How should you load test?

Load testing involves a complex process with several steps:

- Creation of a dedicated test environment

- Definition of the load testing scenarios

- Determination of the load testing transactions: data preparation, virtual user volume identification, and understanding of users’ connection settings

- Running of test scenarios; results collection

- Results analysis and recommendations for app/website improvements
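For the virtual user volume step, one common starting point (an assumption of this sketch, not a method prescribed above) is Little’s Law: the number of concurrent users is roughly the target throughput multiplied by the time each user spends per request, including think time. The function name and the figures below are hypothetical.

```python
import math


def required_virtual_users(target_rps, response_time_s, think_time_s):
    """Estimate concurrent virtual users with Little's Law: N = X * (R + Z).

    target_rps       X: requests per second the test should generate
    response_time_s  R: expected average response time in seconds
    think_time_s     Z: average pause between a user's requests in seconds
    """
    if min(target_rps, response_time_s, think_time_s) < 0:
        raise ValueError("inputs must not be negative")
    return math.ceil(target_rps * (response_time_s + think_time_s))


# Hypothetical target: 50 requests/s, 0.5 s responses, 4.5 s think time.
print(required_virtual_users(50, 0.5, 4.5))  # 250
```

The estimate is only as good as its inputs, so it is worth revisiting once real response times have been measured.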

What are the advantages and disadvantages of load testing?

As with any tool, load testing tools have pros and cons.

Advantages

- Identifies malfunctions before software goes into production

- Improves system scalability

- Reduces system shutdown risk

- Cuts cost of failure(s)

- Provides a better user experience

Disadvantages

- Requires programming knowledge

- Generally, more expensive

What are the main tools for load testing?

NeoLoad

Description

Enterprise scale load testing platform designed for Agile and DevOps.

Advantages

Integrates with Continuous Delivery pipeline to support performance testing throughout the software lifecycle (from component testing to complete system load testing)

Disadvantages

Designed for large-scale enterprise use rather than for single-user use cases.

JMeter

Description

Open source load testing tool for analyzing and measuring the performance of a variety of services (focuses on web apps).

Advantages

Open source tool for performance testing.

Disadvantages

No support for Enterprise.

LoadRunner

Description

Established desktop load testing tool with a graphical interface, aimed at traditional web apps.

Advantages

Supports sharing across your business: you can create multiple users and connect them to projects.

Disadvantages

Three tools must be installed:

- VuGen (Virtual User Generator): a tool for creating and editing scripts.

- Controller: a tool to run and monitor tests.

- Analysis: a tool to analyze the test results – similar to a professional license of NeoLoad.

LoadNinja

Description

Load testing through a graphical interface and APIs.

Advantages

Simple load generation that uses real browsers only.

Disadvantages

Relatively immature app today. APIs are limited – unable to achieve half of what the TLN’s web APIs can do.

Load testing best practices for DevOps and Agile

Shift left and component testing – driving earlier load testing execution

What’s the upside of faster, increased testing within shorter cycles? The ability to quickly identify a performance problem upstream, during development, so that you avoid the typical practice of complex and costly post-sprint correction. Load testing earlier, continuously, and automatically is the right approach.

Service Level Agreement (SLA) Management

Conducting load tests earlier starts with proper documentation of user stories, which should always include all associated performance requirements (similar to the functional testing approach). This involves identifying and documenting Service Level Agreements (SLAs) and analyzing load test results in relation to performance indicators such as:

- DNS resolution speed

- Response time

- Time-to-last-byte

- System uptime

- The technical behavior of the infrastructure

A good rule of thumb when making sure performance is properly integrated into development work is to put SLAs in the user stories directly, as another dimension in your Definition of Done (DoD). In this case, a user story cannot be “completed” if the performance criteria are not met.

NeoLoad allows you to set custom SLAs. These thresholds are used to integrate load testing into the Continuous Integration process: they determine the pass/fail status of a test, which in turn decides whether the automatic build process continues. Learn more about NeoLoad.
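Independently of any particular tool, the idea of SLA thresholds driving a pass/fail status can be sketched in a few lines. The `Sla` class, the `evaluate_slas` function, the metric names, and the threshold values are all hypothetical and chosen only for illustration; this is not NeoLoad’s SLA format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sla:
    """One threshold: the measured value must not exceed max_value."""
    metric: str
    max_value: float


def evaluate_slas(measured, slas):
    """Return (passed, violations) for a dict of measured metric values."""
    violations = []
    for sla in slas:
        value = measured.get(sla.metric)
        if value is None:
            violations.append(f"{sla.metric}: no measurement")
        elif value > sla.max_value:
            violations.append(f"{sla.metric}: {value} exceeds {sla.max_value}")
    return (not violations, violations)


# Hypothetical SLAs attached to a user story's Definition of Done.
story_slas = [
    Sla("response_time_p95_s", 0.8),
    Sla("dns_resolution_s", 0.05),
    Sla("error_rate", 0.01),
]

measured = {"response_time_p95_s": 0.95, "dns_resolution_s": 0.02, "error_rate": 0.0}
passed, violations = evaluate_slas(measured, story_slas)
print(passed)      # False
print(violations)  # ['response_time_p95_s: 0.95 exceeds 0.8']
```

Treating a missing measurement as a violation is a deliberate choice here: a story whose performance was never measured should not count as done.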

Load Testing of Components

Validating code performance at the earliest stage means that testing starts well before the application is complete. Load tests should be run as soon as the most strategic components are available. The goal should be to load test the APIs, web services, and microservices that act as the application’s vital organs.

NeoLoad is designed to make load testing as simple as possible. It is aimed at both specialist testers and developers and allows testing of APIs/components during the early stages of the development cycle.
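At the smallest scale, a component-level performance check can be as simple as timing a function against a budget. The sketch below assumes a hypothetical component (`build_report`) and a hypothetical budget of 0.5 seconds. It uses the median of several runs to reduce noise, which is one choice among several.

```python
import statistics
import time


def median_duration(func, repeats=5):
    """Call func several times and return the median duration in seconds."""
    durations = []
    for _ in range(repeats):
        start = time.perf_counter()
        func()
        durations.append(time.perf_counter() - start)
    return statistics.median(durations)


def check_performance_budget(func, budget_s, repeats=5):
    """Return (within_budget, measured_median_s) for the given component."""
    measured = median_duration(func, repeats)
    return measured <= budget_s, measured


def build_report():
    """Hypothetical component under test."""
    return sorted(str(n) for n in range(10_000))


if __name__ == "__main__":
    within_budget, measured = check_performance_budget(build_report, budget_s=0.5)
    print(f"within budget: {within_budget}, median: {measured:.4f}s")
```

A check like this can live next to the component’s functional unit tests, so that a performance expectation exists as soon as the code does.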

Service Virtualization for Realistic Component Load Testing

An application’s components depend on one another, so load testing each component in isolation does not validate the performance of the assembled system. As a result, the component testing approach offers two options:

- Wait until the components consumed by the component under test are available

- Implement service virtualization for the components not yet available, which makes load tests more realistic and lets them start sooner

NeoLoad interfaces with service virtualization tools such as Parasoft and SpectoLabs. Want to learn more? Check out our white paper, Using NeoLoad for Microservices, API, and Component Testing.
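To illustrate the principle only, the sketch below stands in for a dependency that is not yet available by serving a canned JSON response with a configurable delay. It uses the Python standard library rather than a dedicated service virtualization tool, and the names (`start_virtual_service`, `fetch_json`), the `/inventory` path, the payload, and the latency are all hypothetical.

```python
import http.client
import json
import threading
import time
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


def make_handler(latency_s, payload):
    """Build a handler that answers every GET with the same canned payload."""
    class Handler(BaseHTTPRequestHandler):
        def do_GET(self):
            time.sleep(latency_s)  # simulate the real service's response time
            body = json.dumps(payload).encode("utf-8")
            self.send_response(200)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

        def log_message(self, format, *args):
            pass  # keep test output quiet

    return Handler


def start_virtual_service(latency_s, payload):
    """Start the stand-in service on a free local port and return the server."""
    server = ThreadingHTTPServer(("127.0.0.1", 0), make_handler(latency_s, payload))
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def fetch_json(port, path):
    """Small client helper: GET a path from the local stand-in service."""
    connection = http.client.HTTPConnection("127.0.0.1", port, timeout=5)
    try:
        connection.request("GET", path)
        return json.loads(connection.getresponse().read())
    finally:
        connection.close()


if __name__ == "__main__":
    server = start_virtual_service(latency_s=0.05, payload={"stock": 12})
    try:
        print(fetch_json(server.server_address[1], "/inventory"))
    finally:
        server.shutdown()
        server.server_close()
```

The component under test is then pointed at the stand-in’s address, and the simulated latency can be tuned to match what is expected of the real dependency.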

Automation of Load Tests and Non-regression Performance Tests

When unit load tests of components are defined, developers can launch them automatically with the Continuous Integration server (e.g., during nightly builds). Build after build, these tests track the component’s performance trend over time; this is a non-regression performance test.

Using the pass/fail status of the tests, the Continuous Integration server can automatically decide whether the integration process can continue, or if it must be stopped for immediate issue correction.

Since the status definition of a load test is closely linked to SLAs, it is necessary to define them precisely to be able to block or authorize the next steps in the build process.
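A non-regression check of this kind can be sketched as a comparison between the current build’s results and a stored baseline. Everything specific here is an assumption: the metric names, the 10% tolerance, and the convention that lower values are better. The one general fact relied on is that CI servers treat a non-zero exit code as a failed step.

```python
import sys


def find_regressions(baseline, current, tolerance=0.10):
    """Return metrics that got worse than the baseline by more than tolerance.

    Assumes lower values are better (e.g. response times in seconds).
    A metric missing from the current results is reported as a regression.
    """
    regressions = {}
    for metric, base_value in baseline.items():
        value = current.get(metric)
        if value is None or value > base_value * (1 + tolerance):
            regressions[metric] = (base_value, value)
    return regressions


def exit_code(regressions):
    """Map the result to a process exit code: 0 passes, 1 stops the build."""
    return 1 if regressions else 0


if __name__ == "__main__":
    # Hypothetical results from the previous and the current nightly build.
    baseline = {"p95_s": 0.40, "mean_s": 0.20}
    current = {"p95_s": 0.47, "mean_s": 0.21}
    regressions = find_regressions(baseline, current)
    for metric, (before, after) in regressions.items():
        print(f"{metric} regressed: {before} -> {after}")
    sys.exit(exit_code(regressions))
```

The tolerance matters as much as the thresholds themselves: too tight and noisy measurements block the build, too loose and slow drifts go unnoticed.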

How to speed up “test-analysis-scenario update” cycles for load testing of assembled applications?

When the application’s components are assembled, integration testing is much more complex than unit load testing. In Agile environments, it is important to adopt the right strategy so that performance testing does not bottleneck the development cycle.

Building an effective strategy to hedge performance risk

It is not possible to test 100% of an application, so it is essential to identify the riskiest areas and focus the depth and intensity of load testing there. In practice, the best-chosen 10-15% of test scenarios will identify 75-90% of the major problems.

For proper risk diagnosis, you must have sufficient knowledge of the application’s architecture. Testers, application managers, and architects must work closely together to ensure this knowledge is shared.
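One simple way to turn that shared knowledge into a shortlist is to score each candidate scenario and keep only the top fraction. The scoring model below (likelihood multiplied by impact, each rated 1 to 5) and the scenario names are assumptions made for this sketch, not a method prescribed by the article.

```python
import math


def prioritize_scenarios(scenarios, fraction=0.15):
    """Return the names of the riskiest scenarios.

    scenarios: list of (name, likelihood, impact) tuples, each rated 1-5.
    fraction:  share of scenarios to keep (at least one is always kept).
    """
    ranked = sorted(scenarios, key=lambda s: s[1] * s[2], reverse=True)
    keep = max(1, math.ceil(len(ranked) * fraction))
    return [name for name, _, _ in ranked[:keep]]


# Hypothetical ratings agreed on by testers, application managers, and architects.
candidates = [
    ("browse catalog", 2, 2),
    ("checkout", 4, 5),
    ("search", 3, 3),
    ("login", 5, 4),
]

print(prioritize_scenarios(candidates, fraction=0.5))  # ['checkout', 'login']
```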

Tools and practices responsible for accelerating the creation of complex load test scenarios

- Gray box testing for the most important components of your application

- Automating variabilization, correlation, and randomization

- Functional test scenarios (e.g., Selenium) to speed up the creation of load tests

With NeoLoad, you can import functional Selenium test scenarios for use during load testing. Learn more about NeoLoad’s features for designing complex scenarios.

Ingredients of effective load test analysis

Agile developers need to understand the root of a performance issue to resolve it quickly. The quality of the diagnosis and the efficiency of the analysis are what make load test results trustworthy and useful. Effective analysis relies on:

- Actionable synthesis of the data (e.g., whether it can support decision-making)

- Understanding of the role/skills of the individual using the load test report

- Use of APM/profiling tool to identify the piece of code responsible for performance regression

NeoLoad offers a test analysis capability that helps you identify the root of a performance problem quickly, leaving more time to solve it. Read more about test results analysis.

Automating the update of your load test scenarios

Updating load testing scripts can often represent more than 50% of a team’s effort. As the application evolves, it is often necessary to rewrite the test scenarios completely. Here’s how to accelerate, or even almost fully automate, this maintenance activity:

- Use the load testing tool to detect application changes and update only the affected part of the test

- Rely on functional test maintenance to fully automate the update

Use NeoLoad’s automatic user path update feature to reduce the time it takes to maintain your scripts by up to 90%. Read more about NeoLoad’s automatic user path update.

Integration of load testing in DevOps toolchain for continuous testing

Collaborative load testing

Application performance is a concern for all members of the Agile team, not just those performing load testing services. It is important that your test results are accessible to everyone, at all times:

- Each team member must be able to consult the test reports

- It should be possible to monitor the performance measures in real-time during test execution (before the end of a load test, which can last several hours). Developers can start issue correction earlier.

With NeoLoad, all teams work together on the load test. Results are shared in a centralized database, as are the real-time monitoring results collected during the tests. Learn more about collaboration capabilities.

The load testing platform’s interaction with your DevOps toolchain

To accelerate Agile and DevOps processes efficiently, it is necessary to integrate your load testing tool with the other solutions in your DevOps toolchain:

- Incorporation into the Continuous Integration servers to automate load tests

- Assimilation with functional testing tools to measure user experience/automate scenario maintenance

- Alliance with APM tools to analyze tests at the code level

To learn more, read our article about how to choose a load testing tool.

NeoLoad offers ready-to-use integrations to facilitate successful toolchain interaction. You can create your custom integrations with NeoLoad’s API.

Essential production performance monitoring information most useful for load testing

Performance feedback gleaned in production with your APM tool is invaluable for refining your load testing approach. Armed with this insight, you can:

- Evaluate load levels observed in production to adapt the testing strategy, reproduce realistic conditions

- Measure actual service levels that condition SLAs in testing

- Identify risk areas in your application

Load testing to performance engineering

The art of load testing continues to evolve towards traditional performance engineering practices, yet responsibility for application performance is still reserved for a small number of specialists today. This evolution, which will vary from business to business depending on performance testing philosophy and the corresponding level of Agile/DevOps adoption, represents a profound shift in the methods, skills, and tools used by the tester. At its core, the skill set of the traditional performance tester needs to expand:

- Knowledge of how to understand and evaluate the application’s architecture

- Awareness of how to best incorporate automation into the toolchain

- Stronger communication and collaboration skills, to become a trusted partner to others who are responsible for performance

In the end, the “new” performance engineer becomes the protector of performance throughout the project lifecycle. Their key responsibilities include:

- Test strategy creation after execution of thorough risk analysis

- Load testing automation enablement

- Supporting developers with the creation of unit load test scenarios

- Monitoring of strategy implementation during production; establishment of an incident reporting process

Try NeoLoad, the most automated performance testing platform for enterprise organizations continuously testing from APIs to applications.